How I Built an AI Agent for my Portfolio (Yabasha.dev) using Laravel & Next.js

I built an autonomous "Senior Editor" agent to run my portfolio. A deep dive into using Laravel 12, Next.js 15, and OpenRouter to benchmark AI models in real time and automate away the friction of SEO and content metadata.

Bashar Ayyash · December 19, 2025 · 2 min read · 330 words

As a Tech Lead and AI Engineer, I often tell my colleagues: "The cobbler's children have no shoes." We build sophisticated, high-performance systems for others, but our own portfolio sites often gather dust, lacking the polish we demand in our professional work.

I decided to fix this for my own platform, Yabasha.dev. I didn't just want a blog; I wanted a living playground for my expertise in AI Agents and Full-Stack Architecture.

So, I built an AI Agent to be my "Senior Editor" and "SEO Manager," automating the tedious parts of content creation so I could focus on writing. Here is a deep dive into how I built it using Laravel, Next.js, and OpenRouter.

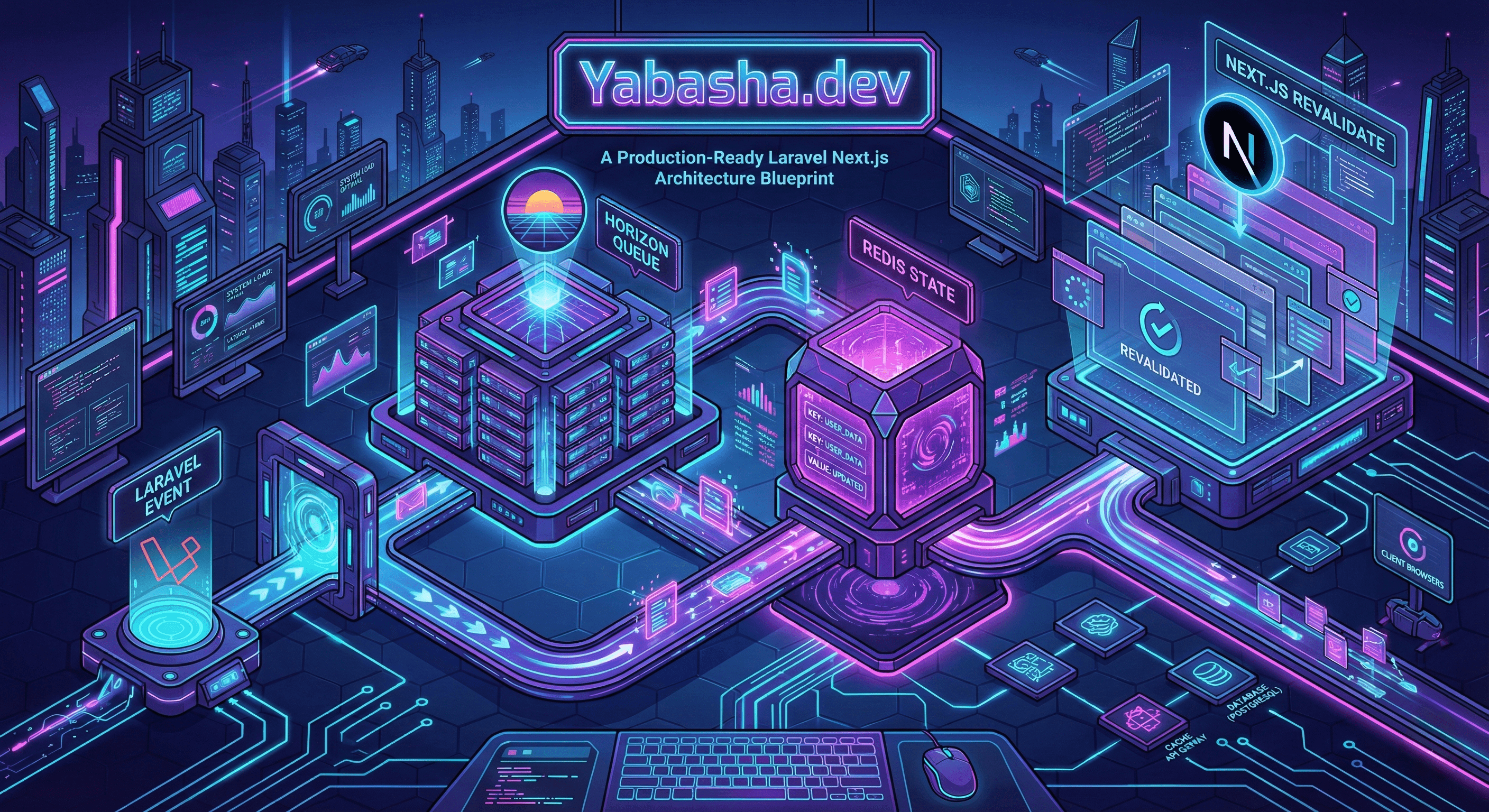

The Architecture: Hybrid Power

I chose a hybrid stack that leverages the strengths of two ecosystems:

- Backend: Laravel 12 (API, Admin Panel via Filament).

- Frontend: Next.js 15 (Static Generation, React Server Components).

- AI Gateway: OpenRouter (Unified API for OpenAI, Anthropic, Google, and Meta models).

This setup gives me the developer experience and stability of Laravel for data management, while delivering the blazing-fast performance of Next.js for the end user.

The Problem: Friction

Writing a technical article is only 50% of the work. The other 50% is:

- Writing a compelling meta description.

- Figuring out the primary and secondary SEO keywords.

- Generating Open Graph (OG) tags so it looks good on Twitter/LinkedIn.

- Categorizing the content.

- Estimating reading time and difficulty level.

This friction often prevented me from hitting "Publish".
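Of those chores, reading time is the most mechanical. Here is a minimal PHP sketch, assuming a 200 words-per-minute reading rate and simple whitespace word counting (the post does not say how Yabasha.dev computes it); estimateReadingTime is a hypothetical helper name:

```php
<?php
// Hypothetical helper: estimates reading time for a Markdown draft.
// Assumes ~200 words per minute; the real rate used by the site is unknown.
function estimateReadingTime(string $markdown, int $wordsPerMinute = 200): int
{
    // Drop fenced code blocks so code is not counted as prose (assumption).
    $prose = preg_replace('/`{3}.*?`{3}/s', ' ', $markdown) ?? $markdown;

    // Count whitespace-separated tokens as words.
    $words = preg_split('/\s+/u', trim($prose), -1, PREG_SPLIT_NO_EMPTY);
    $count = $words === false ? 0 : count($words);

    // Round up so even very short posts show at least one minute.
    return max(1, (int) ceil($count / $wordsPerMinute));
}

echo estimateReadingTime(str_repeat('word ', 330)), PHP_EOL; // prints 2
```

A 330-word post rounds up to a 2-minute read, which matches the figures in this article's own header.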

The Solution: The "Editor" Agent

I created a background service in Laravel (PostAiService) that acts as an autonomous agent. It doesn't just "summarize" text; it analyzes it with a specific persona.

1. The Brain

The core logic resides in a dedicated service that constructs a complex prompt. I instruct the LLM to act as an "Expert SEO Specialist and Content Editor".

Here is a simplified look at the prompt structure I use:

```php
// app/Services/PostAiService.php

protected function buildAnalysisPrompt(string $content): string
{
    // The original snippet uses $safeContent without defining it; how it is
    // actually sanitized is not shown, so a plain trim stands in here.
    $safeContent = trim($content);

    return <<<EOT
Act as an expert SEO specialist and content editor.
Analyze the following blog post content and return a JSON object with:
1. `summary_short`: A concise 1-2 sentence hook.
2. `level`: 'beginner', 'intermediate', or 'advanced'.
3. `intent`: 'guide', 'opinion', 'case_study', etc.
4. `og_title`: A catchy, optimized Open Graph title.
5. `primary_keyword`: The single most important SEO keyword.
6. `secondary_keywords`: An array of supporting keywords.

Content:
{$safeContent}
EOT;
}
```

This ensures that every single article I write has structured, machine-readable metadata that Next.js can easily ingest.
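Because the model's reply feeds straight into page metadata, it is worth validating before it is saved. A minimal sketch, assuming the reply is the bare JSON object the prompt requests, possibly wrapped in a Markdown code fence (which models often add); parseAnalysis and its checks are hypothetical, not part of PostAiService:

```php
<?php
// Hypothetical validator for the agent's reply. Field names come from the
// prompt in buildAnalysisPrompt(); the checks themselves are assumptions.
function parseAnalysis(string $raw): array
{
    // Strip an optional Markdown code fence around the JSON.
    $json = preg_replace('/^\s*`{3}(?:json)?\s*|\s*`{3}\s*$/i', '', $raw) ?? $raw;

    // JSON_THROW_ON_ERROR (PHP 7.3+) raises JsonException on malformed output.
    $data = json_decode($json, true, 512, JSON_THROW_ON_ERROR);
    if (!is_array($data)) {
        throw new UnexpectedValueException('Expected a JSON object.');
    }

    // Every scalar field requested by the prompt must be a string.
    foreach (['summary_short', 'level', 'intent', 'og_title', 'primary_keyword'] as $key) {
        if (!isset($data[$key]) || !is_string($data[$key])) {
            throw new UnexpectedValueException("Missing or non-string field: {$key}");
        }
    }

    // The prompt defines `level` as a closed set, so reject anything else.
    if (!in_array($data['level'], ['beginner', 'intermediate', 'advanced'], true)) {
        throw new UnexpectedValueException("Unexpected level: {$data['level']}");
    }

    if (!isset($data['secondary_keywords']) || !is_array($data['secondary_keywords'])) {
        throw new UnexpectedValueException('secondary_keywords must be an array.');
    }

    return $data;
}
```

Rejecting a bad reply (and retrying or leaving the fields empty) is safer than publishing an Open Graph title the model never actually produced.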

2. The Marketplace of Models

One of the coolest features I built is a dynamic model selector. The AI landscape changes weekly. One week GPT-5.2 is king; the next, Claude Opus 4.5 takes the crown, or Gemini 3 Pro shows up offering a 2M-token context window.

I didn't want to hardcode a model.

I built an AiModel resource in Filament that tracks the available models from OpenRouter. But I went a step further: I implemented a benchmarking system.

```php
// app/Services/OpenRouterService.php
// Requires (namespaces assumed): use Illuminate\Support\Facades\Http;
//                                use App\Models\AiModel;

public function benchmarkModel(AiModel $model): ?float
{
    // We fire a standard test prompt to measure real-world speed
    $startTime = microtime(true);

    $response = Http::withToken($this->apiKey)
        ->post($this->baseUrl.'/chat/completions', [
            'model' => $model->request_id,
            'messages' => [['role' => 'user', 'content' => 'Count from 1 to 10.']],
        ]);

    // Calculate tokens per second (TPS)
    // ...

    $model->update(['actual_speed' => $tps]);

    // The signature promises ?float, so return the measured speed.
    return $tps;
}
```
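The snippet above elides the TPS arithmetic. One plausible way to fill it in, assuming OpenRouter returns the OpenAI-compatible usage.completion_tokens field; tokensPerSecond is a hypothetical helper, not code from the post:

```php
<?php
// Hypothetical sketch of the elided TPS step. Assumes the decoded response
// body has the OpenAI-compatible shape ['usage' => ['completion_tokens' => N]].
function tokensPerSecond(array $responseBody, float $startTime, float $endTime): ?float
{
    $tokens = $responseBody['usage']['completion_tokens'] ?? null;
    $elapsed = $endTime - $startTime;

    // Without a token count or a positive duration there is nothing to measure.
    if (!is_numeric($tokens) || $elapsed <= 0) {
        return null;
    }

    return round($tokens / $elapsed, 2);
}
```

Inside benchmarkModel it would be called as tokensPerSecond($response->json(), $startTime, microtime(true)). Note that this measures end-to-end throughput, including network latency and time to first token, so a short prompt like "Count from 1 to 10." will understate a model's raw generation speed; it is still fair for comparing models against each other.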

In my admin panel, I can sort models by Cost and Speed (Tokens/Sec) and toggle the active model for my agent instantly. If a new, cheaper model comes out, I add it, benchmark it, and switch my agent to use it.
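Sorting and switching can be expressed as two small functions. This sketch assumes each model is an array with cost (a single per-token price) and actual_speed (the benchmarked TPS); rankModels, cheapestFastEnough, and the minimum-speed rule are hypothetical, not the Filament resource's actual logic:

```php
<?php
// Hypothetical ranking helper. `cost` and `actual_speed` are assumed
// field names, not the real AiModel schema.
function rankModels(array $models): array
{
    // Fastest first; when speeds tie, the cheaper model wins.
    // Unbenchmarked models (null speed) count as 0 TPS and sink to the bottom.
    usort($models, fn (array $a, array $b): int =>
        [$b['actual_speed'] ?? 0.0, $a['cost']] <=> [$a['actual_speed'] ?? 0.0, $b['cost']]);

    return $models;
}

// Hypothetical switching rule: the cheapest model whose last benchmark
// met a minimum speed, or null if none qualifies.
function cheapestFastEnough(array $models, float $minTps): ?array
{
    $eligible = array_filter($models, fn (array $m): bool => ($m['actual_speed'] ?? 0.0) >= $minTps);
    if ($eligible === []) {
        return null;
    }

    // usort also reindexes, so $eligible[0] is the cheapest survivor.
    usort($eligible, fn (array $a, array $b): int => $a['cost'] <=> $b['cost']);

    return $eligible[0];
}
```

A speed floor plus "cheapest wins" is one way to make "add it, benchmark it, switch to it" a single decision rather than a manual comparison.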

The Result: Zero-Friction Publishing

Now, my workflow is simple:

- I write a rough draft in Markdown.

- I save it.

- The PostObserver detects the change and queues a job.

- My AI agent wakes up, reads my draft, and fills in all the SEO metadata, summaries, and categorization.

- Next.js rebuilds the page, pulling this rich metadata into the <head> of the document.
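For that last step, one option is to have the Laravel API return metadata already shaped like the Next.js Metadata object (title, description, keywords, openGraph), so generateMetadata can pass it through unchanged. A sketch under that assumption; toNextMetadata is hypothetical, and using summary_short as the meta description is a guess, not something the post specifies:

```php
<?php
// Hypothetical API payload builder: maps the agent's analysis onto keys that
// mirror the Next.js Metadata object. Field mapping is an assumption.
function toNextMetadata(string $postTitle, array $analysis): array
{
    // Primary keyword first, then supporting ones, without blanks or duplicates.
    $keywords = array_values(array_unique(array_filter(array_merge(
        [$analysis['primary_keyword'] ?? null],
        $analysis['secondary_keywords'] ?? []
    ))));

    $description = $analysis['summary_short'] ?? '';

    return [
        'title' => $postTitle,
        'description' => $description,
        'keywords' => $keywords,
        'openGraph' => [
            // Fall back to the post title if the agent has not run yet.
            'title' => $analysis['og_title'] ?? $postTitle,
            'description' => $description,
            'type' => 'article',
        ],
    ];
}

echo json_encode(toNextMetadata('My Post', [
    'summary_short' => 'A concise hook.',
    'og_title' => 'A Catchy Title',
    'primary_keyword' => 'laravel',
    'secondary_keywords' => ['next.js', 'ai agents'],
]), JSON_PRETTY_PRINT), PHP_EOL;
```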

The result is a perfectly optimized page on Yabasha.dev without me spending a single second on "optimization."

Conclusion

AI isn't about replacing engineers; it's about amplifying them. By building this agent, I've removed the administrative burden of blogging, allowing me to focus entirely on sharing knowledge.

If you're interested in building similar AI-driven architectures or need a senior pair of hands on your Next.js/Laravel stack, feel free to reach out or check out my work at Yabasha.dev.


AUTHOR

Bashar Ayyash (Yabasha)

AI Engineer & Full-Stack Tech Lead

Expertise: 20+ years full-stack development. Specializing in architecting cognitive systems, RAG architectures, and scalable web platforms for the MENA region.
